I-SplitEE Algorithm Reduces Neural Net Edge Compute Costs by 55% with Minimal Performance Impact

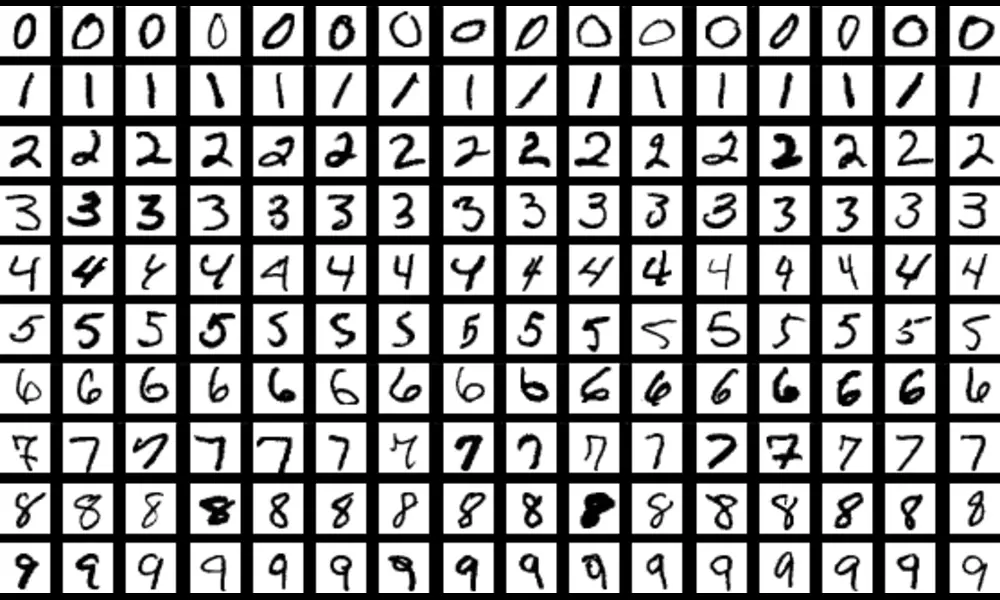

Published on Fri Feb 02 2024

Image: File:MnistExamplesModified.png | Suvanjanprasai on Wikimedia

Deep Neural Networks (DNNs) are a form of Artificial Intelligence (AI) frequently used for tasks like image classification. The challenge of deploying these computationally heavy networks on less-capable devices, such as smartphones and IoT gadgets, has become more pronounced. Enter the work of researchers from the Indian Institute of Technology Bombay, who have developed a solution that could substantially improve AI's efficiency on these devices. Their research, presented in the paper "I-SplitEE: Image classification in Split Computing DNNs with Early Exits," introduces an approach that integrates early exits and split computing within DNNs. This technique promises to cut computational and communication costs significantly while keeping the impact on performance minimal, even when faced with real-world challenges like varying environmental conditions that affect image quality.

The essence of their approach lies in strategically determining the "splitting layer" within a deep neural network. This is the point up to which computations are performed on an edge device (such as a mobile phone or IoT device) before considering whether to continue processing on the device or offload the task to the cloud for completion. By incorporating early exits — decision points within the network where a prediction can be made to avoid further computation — the method judiciously balances accuracy and efficiency. For instance, under certain conditions, it is feasible to make accurate predictions without processing the complete network, thereby saving precious computational resources.
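To make the edge-or-cloud decision described above concrete, here is a minimal sketch in Python. It assumes the exit attached at the splitting layer produces class logits and that a fixed confidence threshold decides whether to stop on the device. The function names, the threshold value, and the use of maximum softmax probability as the confidence measure are illustrative assumptions, not details taken from the paper.

```python
import math

def softmax(logits):
    """Convert raw logits to probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def infer_with_split(exit_logits, cloud_logits, threshold=0.9):
    """Decide at the splitting layer whether to exit on the edge or offload.

    exit_logits:  logits from the early exit at the splitting layer (edge).
    cloud_logits: logits the remaining layers would produce in the cloud.
                  In a real system these are only computed after offloading;
                  they are passed in here to keep the sketch self-contained.
    threshold:    hypothetical confidence threshold for exiting early.
    """
    probs = softmax(exit_logits)
    confidence = max(probs)
    if confidence >= threshold:
        # Confident enough: predict on the device and skip the cloud.
        return probs.index(confidence), "edge"
    # Not confident: offload and use the final-layer prediction.
    cloud_probs = softmax(cloud_logits)
    return cloud_probs.index(max(cloud_probs)), "cloud"

# An easy sample exits on the edge; an ambiguous one is offloaded.
easy = infer_with_split([5.0, 0.0, 0.0], [4.0, 0.0, 0.0])
hard = infer_with_split([0.2, 0.1, 0.0], [0.0, 3.0, 0.0])
```

In this sketch, a higher threshold sends more samples to the cloud (higher cost, typically higher accuracy), while a lower threshold keeps more work on the device.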

A notable aspect of their work is the I-SplitEE algorithm, an online learning method suited to scenarios that lack ground-truth labels and where data arrives sequentially. It dynamically adapts the splitting layer based on real-time data, making the model more flexible and effective across varying conditions. As the researchers demonstrate, this reduces costs by at least 55% while limiting performance degradation to at most 5%.
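One standard way to adapt a choice online without labels is a multi-armed bandit, where each candidate splitting layer is an "arm". The sketch below uses the UCB1 rule with a reward defined as exit confidence minus a per-layer cost. Both the choice of UCB1 and the shape of the reward are assumptions made for illustration; the article does not specify the exact update rule, and the class and parameter names are hypothetical.

```python
import math

class SplitLayerBandit:
    """UCB1-style selector over candidate splitting layers (illustrative)."""

    def __init__(self, num_layers, exploration=1.0):
        self.counts = [0] * num_layers   # times each layer was chosen
        self.means = [0.0] * num_layers  # running mean reward per layer
        self.exploration = exploration   # hypothetical exploration weight
        self.t = 0                       # total number of updates so far

    def select(self):
        # Try every layer once before applying the UCB rule.
        for layer, n in enumerate(self.counts):
            if n == 0:
                return layer
        scores = [
            m + self.exploration * math.sqrt(2.0 * math.log(self.t) / n)
            for m, n in zip(self.means, self.counts)
        ]
        return scores.index(max(scores))

    def update(self, layer, reward):
        # Incremental mean update for the chosen layer.
        self.t += 1
        self.counts[layer] += 1
        self.means[layer] += (reward - self.means[layer]) / self.counts[layer]

def reward(confidence, layer, cost_per_layer=0.05):
    """Label-free reward: exit confidence minus an assumed per-layer cost."""
    return confidence - cost_per_layer * (layer + 1)

# Usage: pick a layer per sample, observe confidence there, update.
bandit = SplitLayerBandit(num_layers=3)
observed_confidence = [0.30, 0.90, 0.95]  # made-up values for the demo
for _ in range(500):
    layer = bandit.select()
    bandit.update(layer, reward(observed_confidence[layer], layer))
```

Because the reward uses only the model's own confidence and a known cost, no ground-truth label is needed at any step, which is the property the paragraph above highlights.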

What sets this study apart is its relevance to everyday technology users and developers focusing on edge computing applications. By optimizing resource use on edge devices and the cloud, I-SplitEE not only makes it feasible to deploy sophisticated AI models on resource-constrained devices but also paves the way for more responsive and efficient AI applications that can operate under the bandwidth and latency constraints presented by mobile networks. This could revolutionize the way we interact with AI on our everyday devices, making services more accessible, faster, and cost-effective.

With technology continually pushing the boundaries of what's possible, the work of Bajpai, Jaiswal, and Hanawal exemplifies how innovative computational strategies can overcome the limitations of hardware to unlock new potentials for artificial intelligence, making it more adaptable and efficient in real-world applications. As the researchers suggest, future exploration could involve refining the algorithm to adapt not just the splitting layer but also the optimal thresholds for early exits based on the complexity of individual samples, further enhancing the model's accuracy and efficiency. This adaptive, intelligent approach to DNN deployment could mark a significant leap forward in the field of edge computing, bringing sophisticated AI capabilities to the very edge of the network.